Last updated: May 7, 2024 (afternoon)

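An optimizer uses the gradient information produced by backpropagation to update a neural network's parameters, lowering the value of the loss function so that the model's output moves closer to the ground-truth labels.

This post follows 深入浅出PyTorch to systematically fill in the fundamentals.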

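Contents of this section

- Understand PyTorch's optimizers

- Learn to optimize with the optimizers PyTorch provides

- Optimizer attributes and construction

- Comparison of optimizers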

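Introduction

The goal of deep learning is to keep adjusting the network's parameters so that they apply nonlinear transformations to the input that fit the output. In essence, this is a function searching for an optimal solution, except that the solution is a set of matrices. How to find that solution quickly is a central topic in deep learning research. Take the classic ResNet-50 as an example: it has roughly 25 million parameters to determine. There are two ways to go about computing so many parameters:

- The first is a brute-force enumeration of the parameters. This works in theory, but in practice it is essentially impossible because the number of parameters is far too large.

- To make solving for the parameters faster, a second approach was proposed: approximate the solution with backpropagation (BP) plus an optimizer.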

Optimizers provided by PyTorch

PyTorch conveniently provides an optimizer library, torch.optim, which includes the following optimizers:

- torch.optim.ASGD

- torch.optim.Adadelta

- torch.optim.Adagrad

- torch.optim.Adam

- torch.optim.AdamW

- torch.optim.Adamax

- torch.optim.LBFGS

- torch.optim.RMSprop

- torch.optim.Rprop

- torch.optim.SGD

- torch.optim.SparseAdam

All of these optimization algorithms inherit from Optimizer, so let's first look at Optimizer, the base class of all optimizers.
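
The inheritance relationship can be checked directly. The following is a minimal sketch, not the library's class definition; the names `optimizer_names` and `inherits` are introduced here purely for illustration.

```python
import torch

# The optimizer classes listed above (names as they appear in torch.optim).
optimizer_names = [
    "ASGD", "Adadelta", "Adagrad", "Adam", "AdamW", "Adamax",
    "LBFGS", "RMSprop", "Rprop", "SGD", "SparseAdam",
]

# Look up each class on torch.optim and check whether it derives from Optimizer.
inherits = {
    name: issubclass(getattr(torch.optim, name), torch.optim.Optimizer)
    for name in optimizer_names
}

for name, ok in inherits.items():
    print(f"torch.optim.{name}: subclass of Optimizer = {ok}")
```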

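Optimizer has three attributes:

defaults: stores the optimizer's hyperparameters.

state: a per-parameter cache of optimizer state, such as momentum buffers.

param_groups: the parameter groups being managed. It is a list in which each element is a dictionary; for SGD, the keys include params, lr, momentum, dampening, weight_decay, and nesterov.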
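
As a rough illustration of the three attributes, the sketch below builds an SGD optimizer over a single tensor and prints each attribute. The tensor `weight` and the hyperparameter values are assumptions chosen for the example, not values from the original post.

```python
import torch

# Hypothetical 2 x 2 parameter, used only for illustration.
weight = torch.randn(2, 2, requires_grad=True)
optimizer = torch.optim.SGD([weight], lr=0.1, momentum=0.9)

# defaults: hyperparameters passed at construction (plus library defaults).
print(optimizer.defaults)

# state: empty until the first step() has run.
state_entries_before = len(optimizer.state)
print(state_entries_before)

# param_groups: a list of dicts; the first group holds our tensor.
print(optimizer.param_groups)

# After one step with momentum, SGD caches a momentum buffer per parameter.
weight.sum().backward()          # gradient of sum() is all ones
optimizer.step()
momentum_buffer = optimizer.state[weight]["momentum_buffer"]
print(momentum_buffer)
```

With momentum enabled, the buffer stored after the first step is simply a copy of the first gradient.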

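Optimizer also has the following methods:

zero_grad(): clears the gradients of the managed parameters. PyTorch does not reset tensor gradients automatically; they accumulate across backward passes, so they must be cleared before each new backward pass.

step(): performs a single update step, updating the parameters from their current gradients.

add_param_group(): adds a parameter group.

load_state_dict(): loads a state dictionary. This can be used to resume training from a checkpoint, continuing from the previously saved optimizer state.

state_dict(): returns a dictionary describing the optimizer's current state.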

Hands-on practice

Setting up the variables for a parameter update
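
A minimal setup might look like the following sketch. The shapes, the all-ones gradient, and the SGD hyperparameters are assumptions made for illustration.

```python
import torch

torch.manual_seed(0)

# A 2 x 2 weight drawn from a normal distribution.
weight = torch.randn((2, 2), requires_grad=True)

# Set the gradient by hand to an all-ones matrix (normally backward() fills it).
weight.grad = torch.ones((2, 2))

# Instantiate an optimizer that manages this single tensor.
optimizer = torch.optim.SGD([weight], lr=0.1, momentum=0.9)

print("weight before step:\n", weight.data)
print("grad before step:\n", weight.grad)
```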

Gradient update

To reduce the loss, each parameter is moved in the direction opposite to its gradient, with lr as the step size.
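
For plain SGD without momentum, one step amounts to `w_new = w_old - lr * grad`. The sketch below checks this on a hand-set gradient; the values are assumptions for illustration, and optimizers with momentum or adaptive learning rates use different update rules.

```python
import torch

weight = torch.randn((2, 2), requires_grad=True)
weight.grad = torch.ones((2, 2))        # hand-set gradient, all ones

lr = 0.1
optimizer = torch.optim.SGD([weight], lr=lr)   # plain SGD, no momentum

before = weight.detach().clone()
optimizer.step()                        # w <- w - lr * grad
after = weight.detach().clone()

# Every entry moved by -lr * 1.
print(after - before)
```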

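Gradients are not cleared by default, so they must be reset manually to avoid affecting later steps.

After zero_grad() is called, the gradient has been cleared.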
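
The sketch below shows both the accumulation and the reset. Note the hedge in the last comment: depending on the PyTorch version, zero_grad() either sets the gradient to None (the default in recent releases) or fills it with zeros.

```python
import torch

weight = torch.randn((2, 2), requires_grad=True)
optimizer = torch.optim.SGD([weight], lr=0.1)

# Two backward passes without zeroing: the gradients add up.
weight.sum().backward()
weight.sum().backward()
accumulated = weight.grad.clone()
print(accumulated)           # each entry is 1 + 1

# Clear the gradient before the next backward pass.
optimizer.zero_grad()
print(weight.grad)           # None or an all-zero tensor, depending on version
```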

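Optimizer parameters

The params entry in the optimizer holds references to the model's parameters (the very same objects), which is how the optimizer can read their gradients.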
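
One way to confirm that no copy is made is to compare object identity, as in this small sketch (the variable name `managed` is introduced here for illustration).

```python
import torch

weight = torch.randn((2, 2), requires_grad=True)
optimizer = torch.optim.SGD([weight], lr=0.1)

# The tensor stored in the first parameter group.
managed = optimizer.param_groups[0]["params"][0]

print(managed is weight)              # same Python object
print(id(managed) == id(weight))

# A gradient set on the original tensor is visible through the optimizer.
weight.grad = torch.ones((2, 2))
print(managed.grad)
```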

Adding parameters to the optimizer
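
A sketch of add_param_group() follows. The second tensor `weight2` and its learning rate are assumptions for illustration; options not given for the new group fall back to the optimizer's defaults.

```python
import torch

weight = torch.randn((2, 2), requires_grad=True)
optimizer = torch.optim.SGD([weight], lr=0.1, momentum=0.9)

# A second, hypothetical parameter with its own learning rate.
weight2 = torch.randn((3, 3), requires_grad=True)
optimizer.add_param_group({"params": [weight2], "lr": 0.0001})

for index, group in enumerate(optimizer.param_groups):
    shapes = [tuple(p.shape) for p in group["params"]]
    print(index, "lr =", group["lr"], "momentum =", group["momentum"], shapes)
```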

Inspecting the optimizer's state_dict
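
The sketch below inspects state_dict() before and after a single step, under the assumption of an SGD optimizer with momentum. In the returned dictionary, parameters are referred to by integer index rather than by tensor.

```python
import torch

weight = torch.randn((2, 2), requires_grad=True)
optimizer = torch.optim.SGD([weight], lr=0.1, momentum=0.9)

# Before any step: no per-parameter state yet.
state_dict_before = optimizer.state_dict()
empty_before = len(state_dict_before["state"]) == 0
print(state_dict_before)

# After one step: the momentum buffer appears under parameter index 0.
weight.grad = torch.ones((2, 2))
optimizer.step()
state_dict_after = optimizer.state_dict()
print(state_dict_after["state"])
print(state_dict_after["param_groups"])
```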

Saving and loading optimizer state
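
A minimal save-and-restore round trip might look like this. The temporary directory and the file name `optimizer_state_dict.pt` are assumptions for the example; in real training the path would point at a checkpoint location of your choice.

```python
import os
import tempfile

import torch

weight = torch.randn((2, 2), requires_grad=True)
optimizer = torch.optim.SGD([weight], lr=0.1, momentum=0.9)

# Take one step so there is some state (a momentum buffer) worth saving.
weight.grad = torch.ones((2, 2))
optimizer.step()

with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "optimizer_state_dict.pt")  # hypothetical file name
    torch.save(optimizer.state_dict(), path)
    loaded_state = torch.load(path)

# A fresh optimizer over the same parameter picks up where the old one left off.
resumed = torch.optim.SGD([weight], lr=0.1, momentum=0.9)
resumed.load_state_dict(loaded_state)
print(resumed.state[weight]["momentum_buffer"])
```

For resuming training, the model's own state_dict is normally saved alongside the optimizer's.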

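Notes:

- Every optimizer is a class, so it must be instantiated before it can be used.

- Within each training iteration, the optimizer needs to carry out two steps:

- Zero the gradients

- Update the parameters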
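
The two points above can be sketched as a small training loop. The model, data, and hyperparameters here are assumptions chosen so the example runs quickly; they are not taken from the original post.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Toy regression problem: fit y = 2x + 1 with a single linear layer.
x = torch.linspace(-1, 1, 32).unsqueeze(1)
y = 2 * x + 1

net = nn.Linear(1, 1)
optimizer = torch.optim.SGD(net.parameters(), lr=0.1)  # instantiate the class
loss_fn = nn.MSELoss()

losses = []
for step in range(100):
    optimizer.zero_grad()          # 1. zero the gradients
    loss = loss_fn(net(x), y)      #    compute the loss
    loss.backward()                #    backpropagate
    optimizer.step()               # 2. update the parameters
    losses.append(loss.item())

print(f"first loss: {losses[0]:.4f}, last loss: {losses[-1]:.6f}")
```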

- Different layers of a network can be given different optimizer parameters.
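
Per-layer settings are passed as a list of dictionaries, one per parameter group. The sketch below uses a small hypothetical nn.Sequential model; any group that omits an option falls back to the value given to the constructor.

```python
import torch
from torch import nn

# Hypothetical two-layer network, used only for illustration.
net = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 2))

optimizer = torch.optim.SGD(
    [
        {"params": net[0].parameters()},               # uses the default lr below
        {"params": net[2].parameters(), "lr": 1e-2},   # overrides lr for this layer
    ],
    lr=1e-5,
)

for index, group in enumerate(optimizer.param_groups):
    print(f"group {index}: lr = {group['lr']}")
```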

Experiment

To help build intuition about optimizers, we ran a small test on the optimizers in PyTorch.

Data generation:
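
The original data-generation code is not available here. As a stand-in, the sketch below assumes a noisy parabola sampled on [-1, 1]; the function, sample count, and noise level are all assumptions.

```python
import torch

torch.manual_seed(0)

# 1000 evenly spaced inputs, reshaped to a column: shape (1000, 1).
x = torch.unsqueeze(torch.linspace(-1, 1, 1000), dim=1)

# Assumed target: y = x^2 plus Gaussian noise with standard deviation 0.1.
y = x.pow(2) + 0.1 * torch.randn(x.size())

print(x.shape, y.shape)
```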

[Figure: distribution curve of the generated data]

Network structure
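
A small fully connected network is enough for a one-dimensional regression test like this. The following is a sketch with an assumed architecture (one hidden layer of 20 units with ReLU), not necessarily the network used for the figure.

```python
import torch
from torch import nn


class Net(nn.Module):
    """Assumed architecture: 1 input -> hidden layer -> 1 output."""

    def __init__(self, hidden_size=20):
        super().__init__()
        self.hidden = nn.Linear(1, hidden_size)
        self.predict = nn.Linear(hidden_size, 1)

    def forward(self, x):
        return self.predict(torch.relu(self.hidden(x)))


net = Net()
output = net(torch.zeros(5, 1))   # batch of 5 scalar inputs
print(output.shape)
```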

Test code
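
A comparison along these lines could be sketched as follows. The choice of optimizers, learning rates, step count, full-batch training, and the data and network are all assumptions; the point is only the pattern of training identical networks with different optimizers and recording the loss curves.

```python
import torch
from torch import nn

torch.manual_seed(0)
x = torch.unsqueeze(torch.linspace(-1, 1, 1000), dim=1)
y = x.pow(2) + 0.1 * torch.randn(x.size())   # assumed noisy parabola


def make_net():
    # Assumed small regression network.
    return nn.Sequential(nn.Linear(1, 20), nn.ReLU(), nn.Linear(20, 1))


# Each factory builds one optimizer from a network's parameters.
optimizer_factories = {
    "SGD": lambda params: torch.optim.SGD(params, lr=0.01),
    "Momentum": lambda params: torch.optim.SGD(params, lr=0.01, momentum=0.9),
    "RMSprop": lambda params: torch.optim.RMSprop(params, lr=0.01),
    "Adam": lambda params: torch.optim.Adam(params, lr=0.01),
}

loss_fn = nn.MSELoss()
history = {}
for name, factory in optimizer_factories.items():
    torch.manual_seed(1)            # identical initial weights for a fair comparison
    net = make_net()
    optimizer = factory(net.parameters())
    losses = []
    for step in range(200):
        optimizer.zero_grad()
        loss = loss_fn(net(x), y)
        loss.backward()
        optimizer.step()
        losses.append(loss.item())
    history[name] = losses
    print(f"{name}: first = {losses[0]:.4f}, last = {losses[-1]:.4f}")
```

Plotting each list in `history` against the step index gives one loss curve per optimizer.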

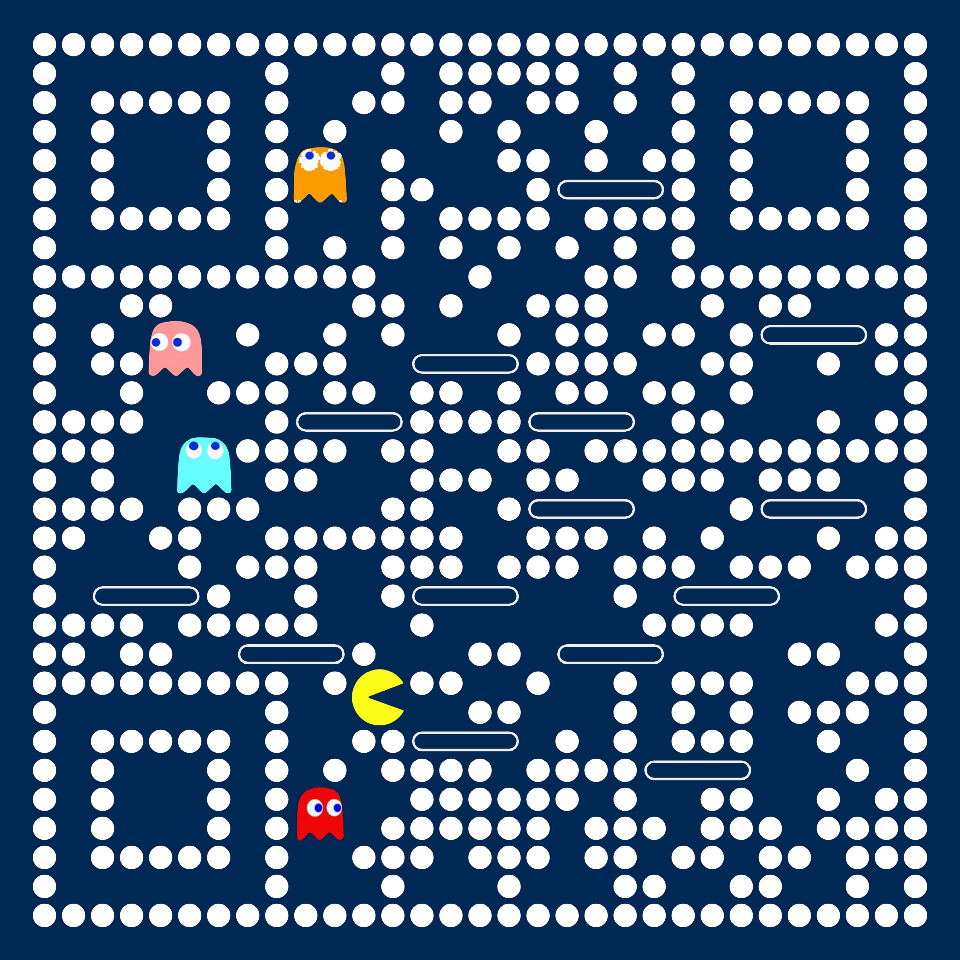

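- Results:

In the figure above, the downward trend of each curve against the number of steps shows how quickly each optimizer converges on this data and model.

Note: the choice of optimizer should be adapted to the model. No optimizer is best in absolute terms, so it is worth running several tests.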

References

Article link:

https://www.zywvvd.com/notes/study/deep-learning/pytorch/torch-learning/torch-learning-8/
